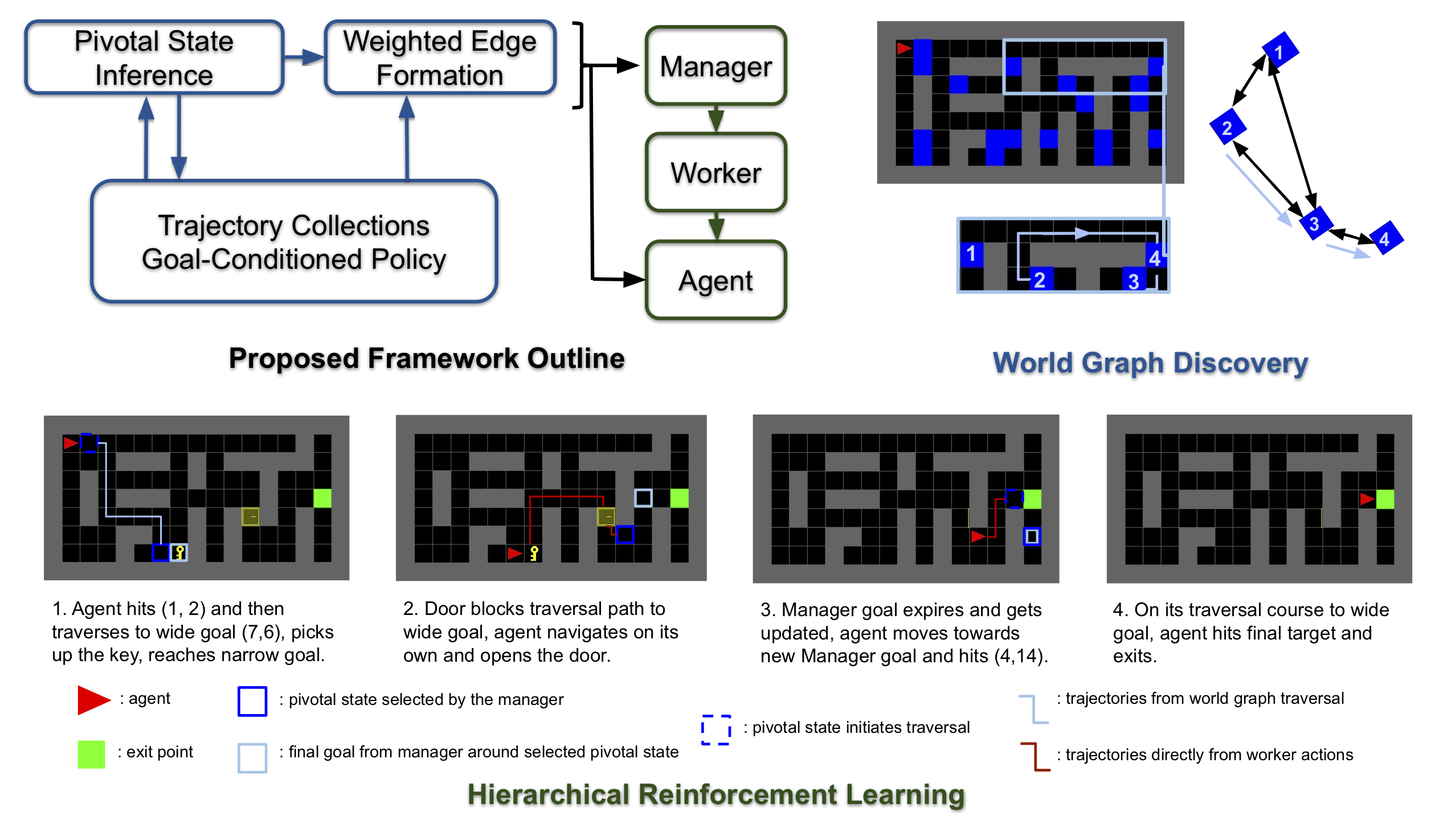

An autonomous agent often encounters various tasks within a single complex environment. Our two-stage framework first builds a simple directed weighted graph abstraction over the world in an unsupervised, task-agnostic manner, and then uses this graph to accelerate hierarchical reinforcement learning on a diverse set of downstream tasks. For details, please refer to the Paper with Appendix.
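To make the idea of a directed weighted graph abstraction concrete, here is a minimal sketch, not the paper's implementation. It assumes the graph nodes are a small set of abstract states and that each edge weight is some estimated traversal cost; the node names, the weights, and the `shortest_path` helper are all hypothetical. A high-level policy could, under these assumptions, use the cheapest path through the graph as a sequence of sub-goals.

```python
import heapq

def shortest_path(graph, start, goal):
    """Dijkstra's algorithm over a dict-of-dicts directed weighted graph.

    Returns (path, cost), or (None, inf) if the goal is unreachable.
    """
    dist = {start: 0.0}
    prev = {}
    queue = [(0.0, start)]
    while queue:
        d, node = heapq.heappop(queue)
        if node == goal:
            break
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry
        for nxt, weight in graph.get(node, {}).items():
            new_d = d + weight
            if new_d < dist.get(nxt, float("inf")):
                dist[nxt] = new_d
                prev[nxt] = node
                heapq.heappush(queue, (new_d, nxt))
    if goal not in dist:
        return None, float("inf")
    # Walk predecessors back from the goal to recover the path.
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    path.reverse()
    return path, dist[goal]

# Hypothetical graph: nodes are abstract states, weights are assumed costs.
world_graph = {
    "A": {"B": 1.0, "C": 4.0},
    "B": {"C": 1.5, "D": 5.0},
    "C": {"D": 1.0},
    "D": {},
}

path, cost = shortest_path(world_graph, "A", "D")
print(path, cost)
```

Because the graph is directed, a path from `"A"` to `"D"` does not imply one from `"D"` to `"A"`; in this toy graph the reverse query returns `(None, inf)`.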

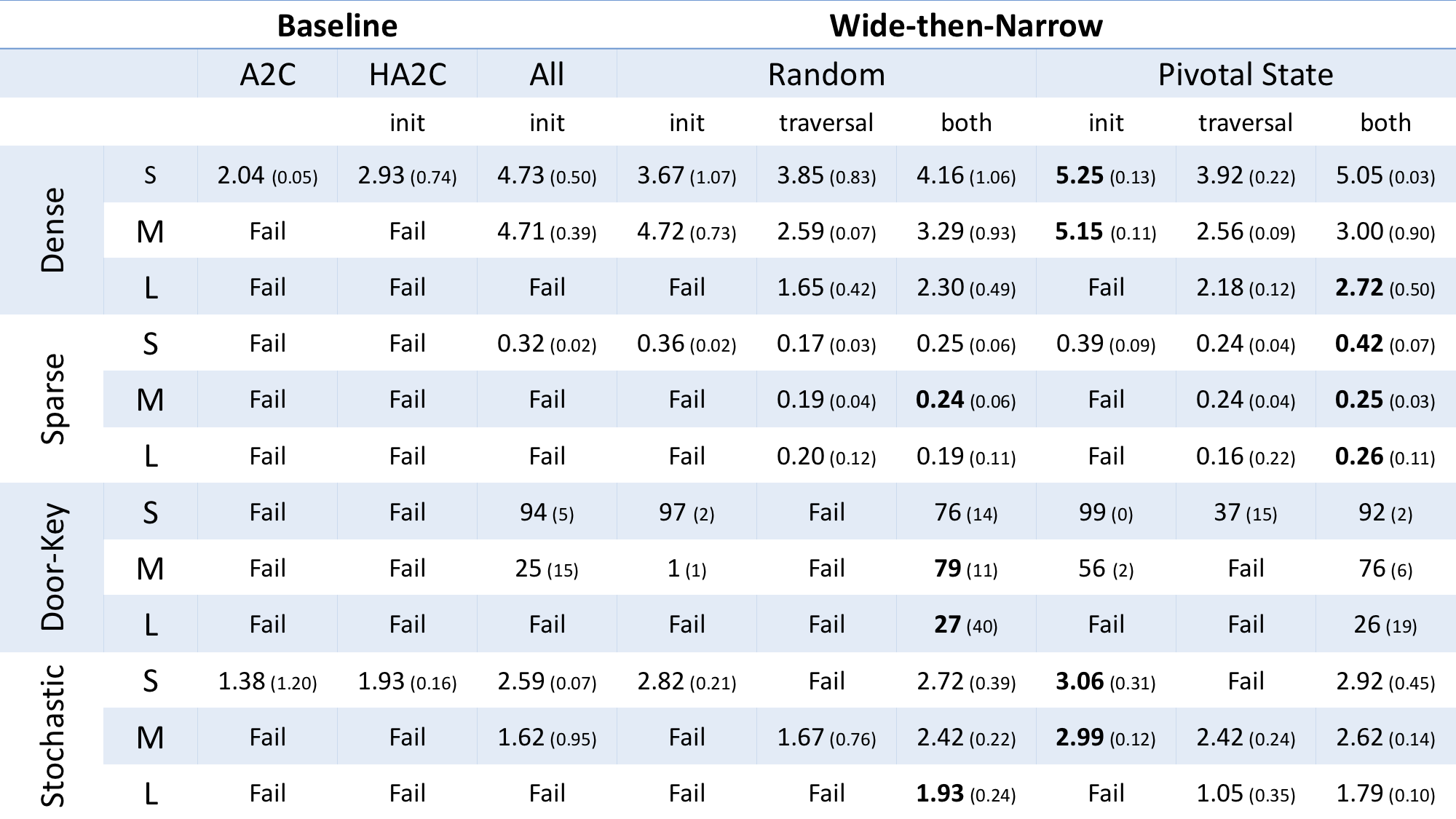

Figure labels: methods (A2C, FN, Proposed); state sets (All Feasible States, Random States, Pivotal States); settings (with and without Goal-Conditioned Policy Initialization).