
I am a CSE PhD candidate in the Graph Exploration and Mining at Scale (GEMS) Lab at the University of Michigan, where I am fortunate to be advised by Prof. Danai Koutra. I also often collaborate with Dr. Jay Thiagarajan at Lawrence Livermore National Laboratory. I am broadly interested in how self-supervised learning can be performed effectively and reliably on non-Euclidean and graph data by incorporating domain invariances and designing grounded algorithms. My recent work has focused on the role of data augmentations in graph contrastive learning.

Email / CV / Google Scholar

|

Puja Trivedi, Danai Koutra, Jay J. Thiagarajan

International Conference on Learning Representations (ICLR), 2023

bibtex / arXiv / Code

We study how adaptation protocols can induce safe and effective generalization on downstream tasks through the lens of feature distortion and simplicity bias.

|

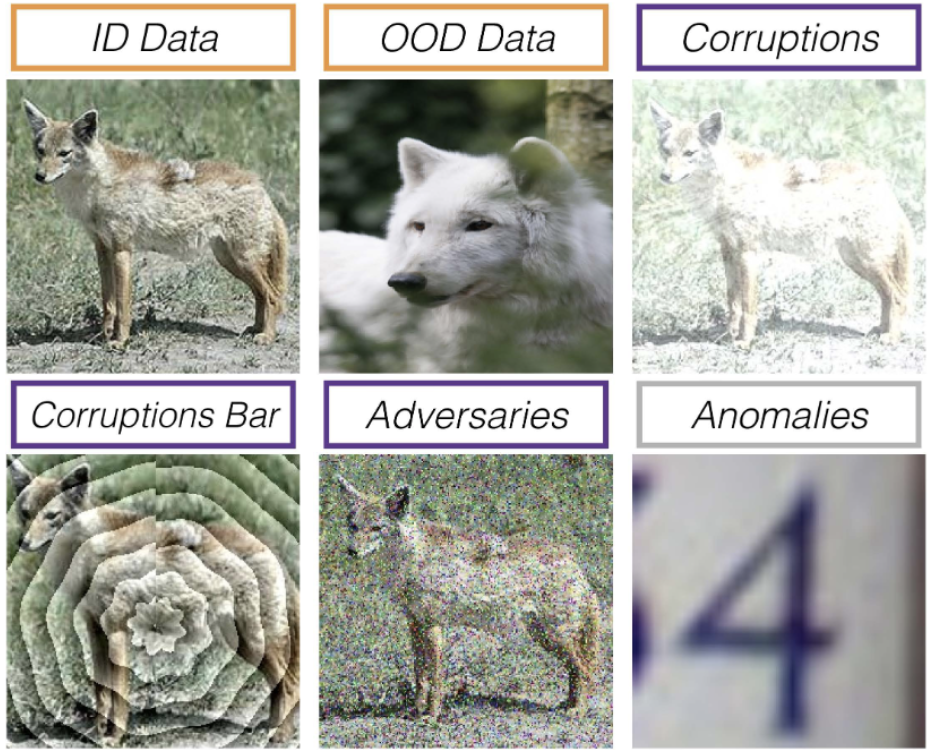

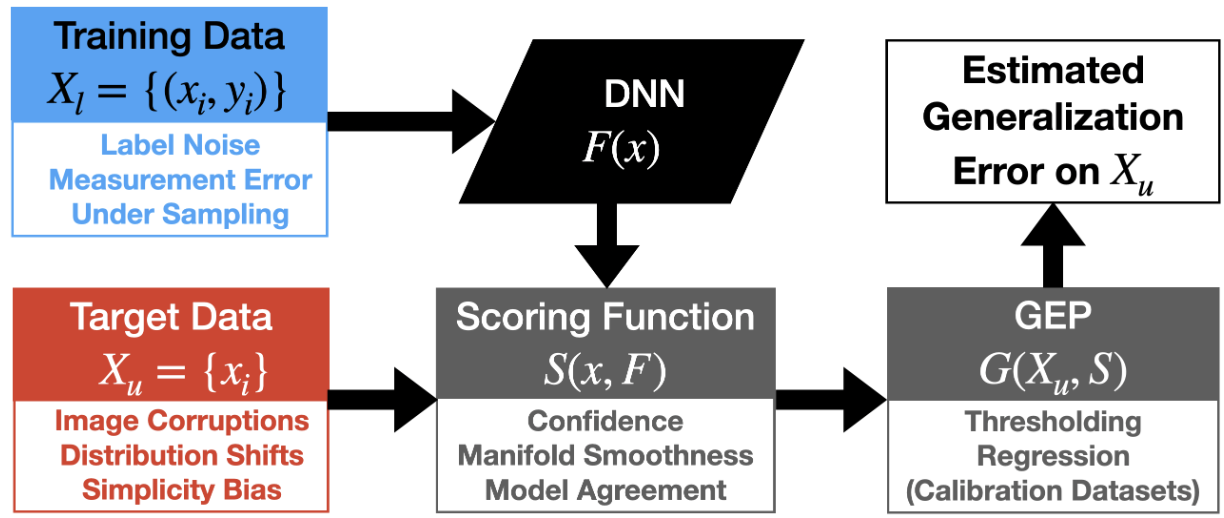

Puja Trivedi, Danai Koutra, Jay J. Thiagarajan

International Conference on Acoustics, Speech, and Signal Processing (ICASSP), 2023

bibtex / arXiv / Code

We rigorously study the effectiveness of popular scoring functions under distribution shifts and corruptions.

|

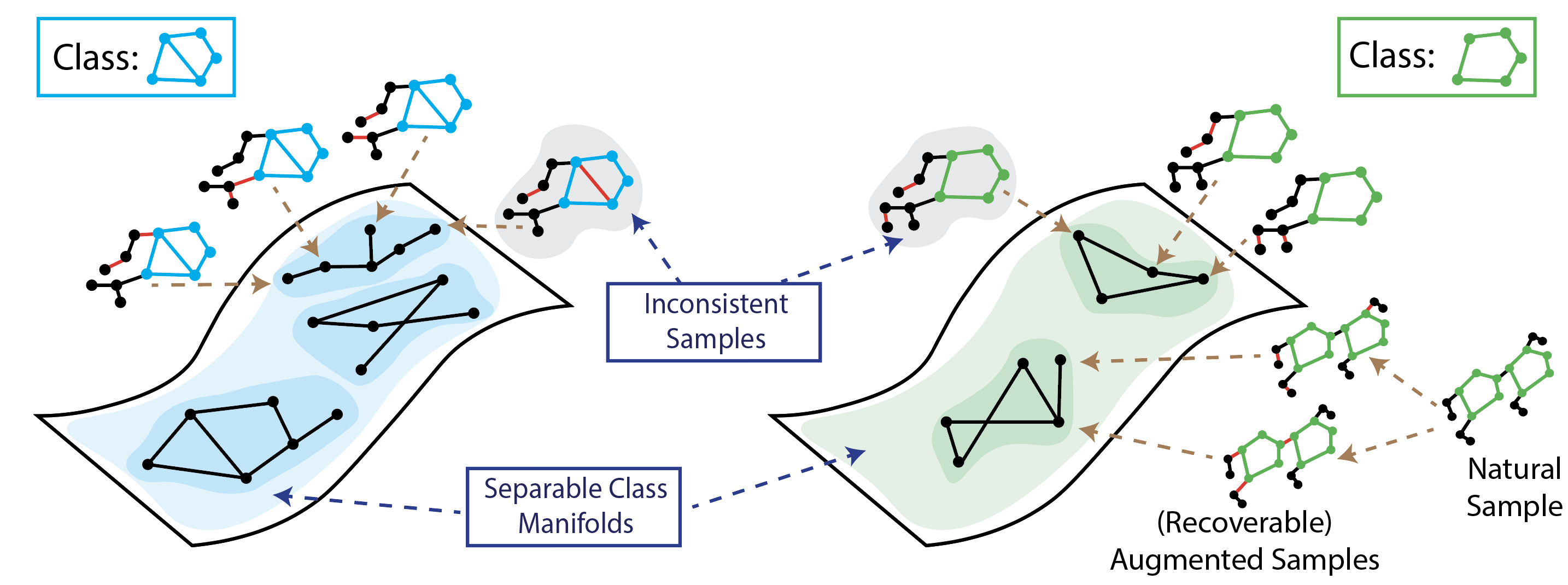

Puja Trivedi, Ekdeep Singh Lubana, Mark Heimann, Danai Koutra, and Jay J. Thiagarajan

Advances in Neural Information Processing Systems (NeurIPS), 2022

bibtex / arXiv / Code

We provide a novel generalization analysis for graph contrastive learning with commonly used, generic graph augmentations. Our analysis identifies several limitations in current self-supervised graph learning practices.

|

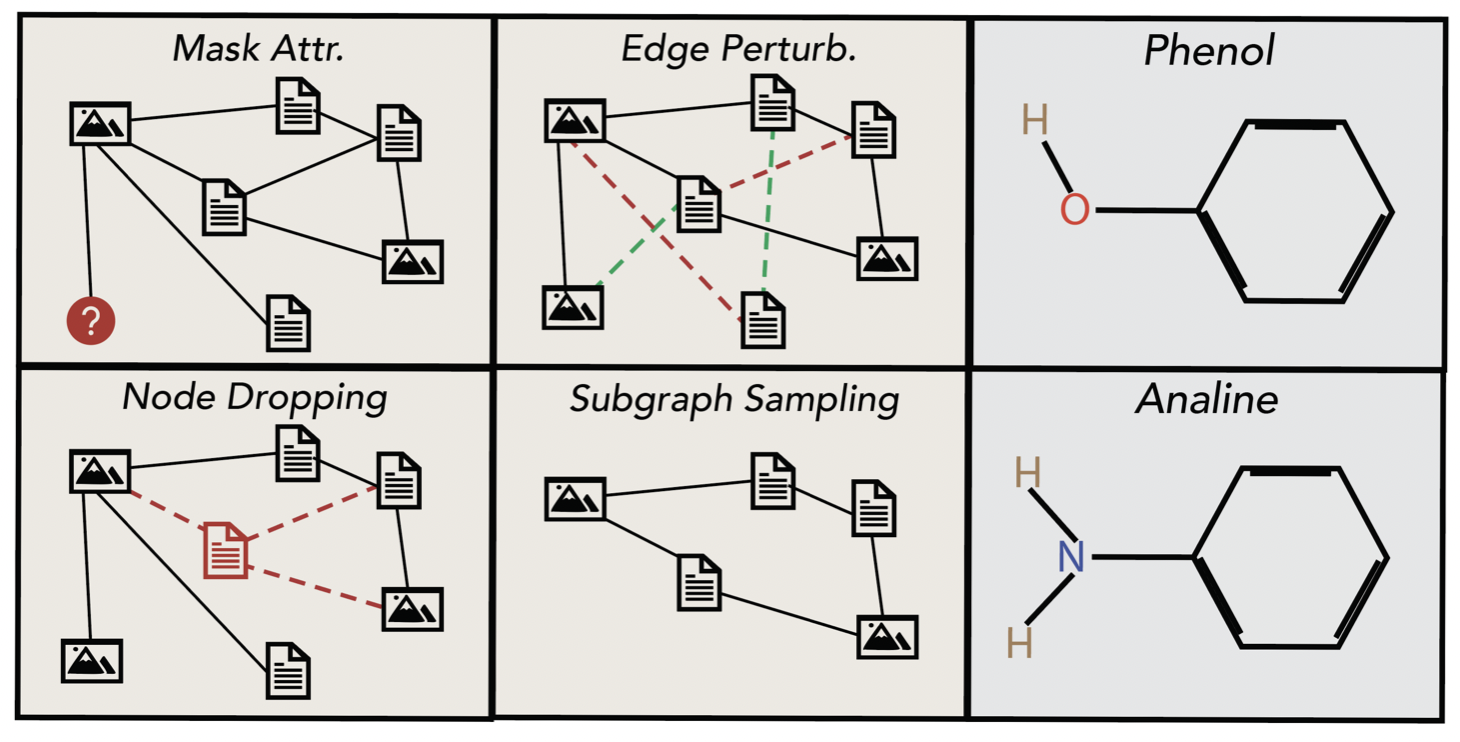

Puja Trivedi, Ekdeep Singh Lubana, Yujun Yan, Yaoqing Yang, and Danai Koutra

ACM The Web Conference (formerly WWW), 2022

bibtex / arXiv / Video

We contextualize the performance of several unsupervised graph representation learning methods with respect to the inductive bias of GNNs, and show significant improvements from structured augmentations defined by task relevance.

|
