Project Bella

Week 5 :')

It was hard to get much of anything done this week between going to Detroit to renew my passport, finishing some prototyping work for Project Blue, scrambling to get started on the third EECS 485 project, pretending that I don't have an exam tomorrow morning in a class I've never attended, and falling into a stubborn and unexpected three-day funk. I was also making sure, after the disappointing performance of last week's foraging agent, to spend more time thinking about whether or not an effort was worthwhile before putting it in.

Whatever results from this endeavor will be at best a proof of concept demonstrating the potential value in centering an entire game around an intelligent agent, or around some central machine-learning feature that cannot yet be done any other way. I've been focusing on creating various brains to improve the realism of the game's titular character, for whom I ultimately intend to find or create evocative art, animation, and audio. As I was reminded by a couple of the people I have interviewed, these aesthetic components are typically sufficient (and maybe sometimes necessary) to create an emotional response in players. Further, the brains I've been training were only intended to express themselves in full when the player does not have control of the main character, and sitting around and watching is not something I'd like to be a core mechanic of my game. I figured that, if an intelligent agent is going to be the main focus of my game, its intelligence should be more directly related to gameplay.

So I essentially settled on trying to create a path-following agent. This probably seems like a very dumb idea given the existence of path-finding algorithms. A* works for top-down 2D games, and there are adaptations of A* for grid-based 2D platformers. Additionally, Theta* is an any-angle path-finding algorithm that can work for grid-based 2D platformers. I could also just create a navmesh in Unity. None of these options is satisfactory for what I have in mind, though, because they all compute the route themselves. The idea is to create an agent that can look at a series of waypoints drawn out by the user and use them as a guide to navigate from source to destination. Perhaps the agent finds a different way to get there; perhaps your path is rife with hazards and the agent chooses to disobey altogether. This would hopefully make the user much more engaged with the core mechanic, as they must directly negotiate with the agent brain to move to their target location.

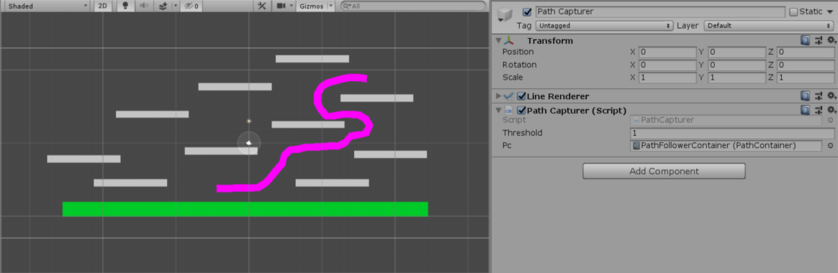

To this end, I created a scene containing the training arena, used for drawing and saving paths for training. In this scene, there's a game object with a line renderer and a script that displays a user-drawn path in the arena and then saves the waypoints to a scriptable object. The scriptable object (called "PathFollowerContainer" in the image below) is essentially a list of lists of Vector3s. It contains all the paths drawn by the user in a single play session.

In another scene, the agent, environment, and academy are set up. The training environment has access to the scriptable object containing all the paths. At the start of every training session, it randomly grabs a path for the agent to follow and generates a trigger collider at every waypoint. The agent uses spherecasts for eyes, so placing trigger colliders along the path allows it to see the path without physically colliding with it. At the end of a session, these triggers are destroyed, and the process starts anew.
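To make the session lifecycle above a bit more concrete, here is a rough sketch in plain Python rather than my actual Unity code. Every name in it (`PathContainer`, `TriggerMarker`, `TrainingEnvironment`, `begin_session`, `end_session`) is made up for illustration; the real thing uses a scriptable object and Unity trigger colliders.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical stand-in for Unity's Vector3.
Vec3 = Tuple[float, float, float]


@dataclass
class PathContainer:
    """Stand-in for the scriptable object: a list of paths, each a list of waypoints."""
    paths: List[List[Vec3]] = field(default_factory=list)


@dataclass
class TriggerMarker:
    """Stand-in for a trigger collider: visible to casts, but blocks nothing."""
    position: Vec3
    is_trigger: bool = True


class TrainingEnvironment:
    def __init__(self, container: PathContainer, rng: Optional[random.Random] = None):
        self.container = container
        self.rng = rng or random.Random()
        self.markers: List[TriggerMarker] = []

    def begin_session(self) -> List[Vec3]:
        # Pick one of the saved paths at random and drop a marker on every waypoint.
        path = self.rng.choice(self.container.paths)
        self.markers = [TriggerMarker(position=p) for p in path]
        return path

    def end_session(self) -> None:
        # Destroy the markers so the next session starts from a clean arena.
        self.markers.clear()


container = PathContainer(paths=[
    [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 1.0, 0.0)],
    [(0.0, 0.0, 0.0), (0.0, 2.0, 0.0)],
])
env = TrainingEnvironment(container, rng=random.Random(0))
chosen_path = env.begin_session()
```

The important part is just the shape of the loop: one random path per session, one marker per waypoint, and a full cleanup at the end.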

I was unsurprised to find that the agent was doing decently (able to scale platforms to reach the destination) after just some 20k iterations; however, despite being severely punished for jumping (so as to encourage jumping only when necessary), it started hopping around gratuitously after several thousand more iterations.

As has been my experience so far, getting this agent to learn better will require careful engineering of the rewards and punishments. I'm also interested in using stacked vector observations (meaning vector observations from the previous n time steps are passed along with the current one) and recurrent neural networks, both of which are supported by ML-Agents, to give the agent "memory" so that it can have a sense of where the destination is even if it has strayed from the original path. These are things I hope to explore, this time hopefully with some promising success, in the coming week.
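For anyone unfamiliar with stacked vector observations, the idea can be sketched in a few lines of plain Python. This is only an illustration of the concept, not how ML-Agents implements it internally; the class name `ObservationStacker`, the zero padding at the start of an episode, and the oldest-first ordering are all assumptions on my part.

```python
from collections import deque
from typing import List, Sequence


class ObservationStacker:
    """Keeps the last `num_stacked` observations and returns them as one flat vector."""

    def __init__(self, obs_size: int, num_stacked: int):
        self.obs_size = obs_size
        self.num_stacked = num_stacked
        self.frames = deque(maxlen=num_stacked)
        self.reset()

    def reset(self) -> None:
        # Assumption: start each episode with zero-filled history.
        self.frames.clear()
        for _ in range(self.num_stacked):
            self.frames.append([0.0] * self.obs_size)

    def push(self, obs: Sequence[float]) -> List[float]:
        if len(obs) != self.obs_size:
            raise ValueError("observation has the wrong size")
        # The deque drops the oldest frame automatically once it is full.
        self.frames.append(list(obs))
        # Flatten oldest-first so the current observation comes last.
        return [value for frame in self.frames for value in frame]


stacker = ObservationStacker(obs_size=2, num_stacked=3)
first = stacker.push([1.0, 2.0])
second = stacker.push([3.0, 4.0])
```

With three stacked frames of size two, the network sees six numbers per step instead of two, which is what lets it pick up on short-term history such as which way it was just moving.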