Project Bella

ILL THINK OF A CREATIVE TITLE EVENTUALLY SORRY

This week, I continued experimenting with the ML-Agents penguin tutorial. I made quite a few changes before attempting to train again: I increased the maximum number of training steps to one million (up from 50 thousand), increased the starting number of fish in the pool to 11 (up from four), and added a reward/punishment that varies Joundoom's closeness to Joundour upon eating a fish: slightly negative if she is far from Joundour, and increasingly positive if she is close. The final list of rewards was as follows:

- A reward of 1 for eating a fish

- A reward of 1 for delivering the fish to Joundour

- A very small punishment every step for idling

- A reward or punishment depending on how far she was from Joundour after retrieving a fish

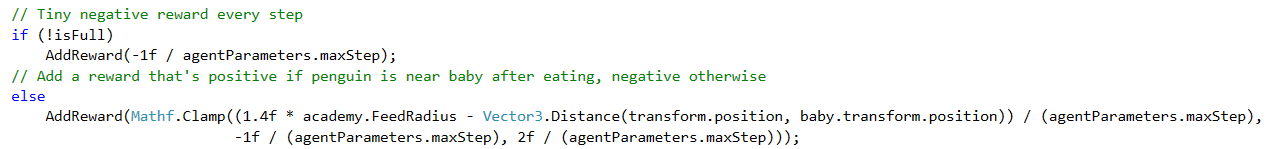

But after just eight thousand training steps, I noticed that she was avoiding the water and the fish altogether. I reasoned that this was because the punishment for being far from Joundour after picking up a fish was initially more severe than the punishment just for idling. To fix this, I capped the punishment for being far from Joundour after picking up a fish at the same value as the idling punishment. The final calculation of this reward/punishment was pleasantly seasoned with magic numbers /s:

After some hundred thousand steps, I was excited to find that Joundoom was avoiding walls and making broad, sweeping circles in what appeared to be an effort to maximize her chances of getting a fish, but when I tested the resultant network, I realized I had given her too much credit: she was in fact just happily swimming in circles.

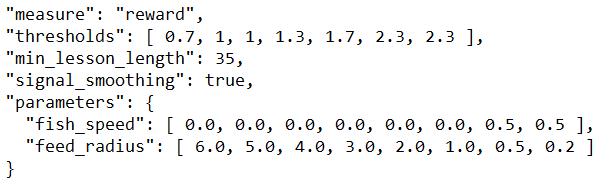

I also noticed that she would often not be able to get back to the shore if she did manage to nab a fish by chance. To fix these two issues, I gave the shore its own collider and tagged it as "beach" (it was originally part of the same collider as the rocks and arena walls and tagged as "walls"). I then added this tag to her Ray Perception Sensor Component 3D so she could see it. I also provided a curriculum for training which deviated slightly from the curriculum provided in the tutorial. A curriculum is a series difficulty parameters, called lessons, applied to a training session. The agent's accrued reward is averaged over previous training steps. Once this average passes a threshold, the agent completes a lesson, and the difficulty parameters are modified to reflect the new lesson. Joundoom's curriculum was as follows:

The feed radius is how close (in meters) Joundoom needed to bring a fish to Joundour for the latter to consume it. This curriculum basically states that, after 35 thousand steps of the same lesson, the training module repeatedly checks whether or not Joundoom's reward averaged reward over previous steps has exceeded the current threshold value. If it has, Joundoom progresses to the next lesson.

Three hundred thousand steps after making all these modifications, Joundoom was catching and delivering every fish in the pond with time to spare before the academy reset the environment. The penguin tutorial man achieved this level of sucess after only 35 thousand steps, but he was also training eight penguins in parallel. On every fixed update, the academy takes a step, as does every agent in the scene. I'm pretty sure this means the academy takes the same number of steps regardless of how many agents are training, so Joundoom achieved proficiency in the expected amount of time. Eight agents reaching proficiency in 35 thousand academy steps is equivalent to one reaching proficiency in 280 thousand.

I gave Joundoom a GoPro and recorded her progress:

To signify to us that Joundoom was successful, a little heart appears over Joundour's head whenever Joundoom brings her a fish.

With this experimental mini-endeavor brought to an end, I started to think in broad terms about what kinds of behavior I might want out of Bella, and how I might be able to achieve it as minimalistically as possible through ML-Agents. Out of the behaviors I brainstormed, including playing, foraging, hunting/pursuing, fleeing, and idling, I settled on trying out foraging (although I'm REALLY excited to try hunting), especially because the UnitySDK project provided by ML-Agents includes an example with this objective called "FoodCollector"; however, Bella will be a 2D game... So I probably, hopefully, will not need 256-node hidden layers just for foraging.

What kind of data is necessary for successful foraging? Primarily scent, and visual data. The ML-Agents toolkit already defines a Ray Perception Sensor Component 2D which is used as the "eyes" of an agent if necessary, so I can use that to retrieve visual data. I figured I could try modeling scent data after this component as well, but there's an issue with scent data that makes raycasts an inappropriate for collecting it: you can detect a scent with your eyes closed.

I have some ideas about how I want scent data to work: I want the granularity of the scent's location to increase as the agent gets closer. I also have some ideas for how to communicate the scent data, such as creating a ScentedObject script on the relevant objects and having them register a callback with a component on the agent, and setting a maximum number of scents that the agent can detect at once. I'm also considering creating my own sensor component like the Ray Perception Sensor Component 2D, and calling it...

Scentsor

Very clever, I know.

I'm really excited to start working on the various parts of Bella's brain. In the coming week, I hope to produce an agent that can forage successfully using scent data alone, and maybe, if I have the time, teach Bella to hunt using a multi-agent environment.