Select Projects

SmartCarts: Autonomous Local Transportation

This project uses a 3D-printed autonomous "golf cart" to transport riders

from various known locations. We're hoping to get a fleet of these in

order to study various problems that arise in request and pick-up

scheduling, route-planning, multi-vehicle systems, and human interaction.

Initial work done at

Mcity included a system in which a user could request a pick-up,

the autonomous vehicle would navigate to an arranged location, and then

drive the user to their selected destination while providing information

about the route and the vehicle's expected actions.

You can see a trailer for the segment Daily Planet filmed

here!

(Full video available on their website for paid subscribers.)

360° Emergency Stop Sensor

Our ground robots need to be able to operate both autonomously and under

human control in regions filled with both positive and negative obstacles.

A lidar, mounted at an angle on top of the robot, sweeps an oval path

around the robot and tests where it's safe for the robot to drive based

on a short history of previous scans. This allows the robot to identify

hills and ramps with reasonable grades to drive on but keeps it from

turning or backing into stairs or other obstacles.
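
As a rough illustration of the grade test (not the code that runs on the robots), the sketch below checks a ground profile along one heading, such as might be accumulated from a short history of scans. The function name `is_drivable`, the `(distance, height)` sample format, and the `max_grade` threshold are all assumptions made for this example.

```python
def is_drivable(profile, max_grade=0.25):
    """Return True if every segment of a ground profile is gentle enough to drive.

    profile: list of (distance_m, height_m) ground samples along one heading,
             ordered by increasing distance from the robot (hypothetical format).
    max_grade: largest allowed |rise / run| between neighbouring samples
               (hypothetical threshold).
    """
    for (d0, z0), (d1, z1) in zip(profile, profile[1:]):
        run = d1 - d0
        if run <= 0:
            continue  # skip duplicate or out-of-order samples
        grade = abs(z1 - z0) / run
        # A steep rise is a positive obstacle (curb, wall); a steep drop is a
        # negative obstacle (stair, ledge). Either one blocks this heading.
        if grade > max_grade:
            return False
    return True


ramp = [(0.5, 0.00), (1.0, 0.05), (1.5, 0.10)]    # gentle 10% grade
stairs = [(0.5, 0.00), (1.0, 0.00), (1.05, -0.18)]  # sudden drop
print(is_drivable(ramp), is_drivable(stairs))
```

A real system would also need to handle sensor noise and gaps in the scan, which this sketch ignores.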

Affordance Recognition

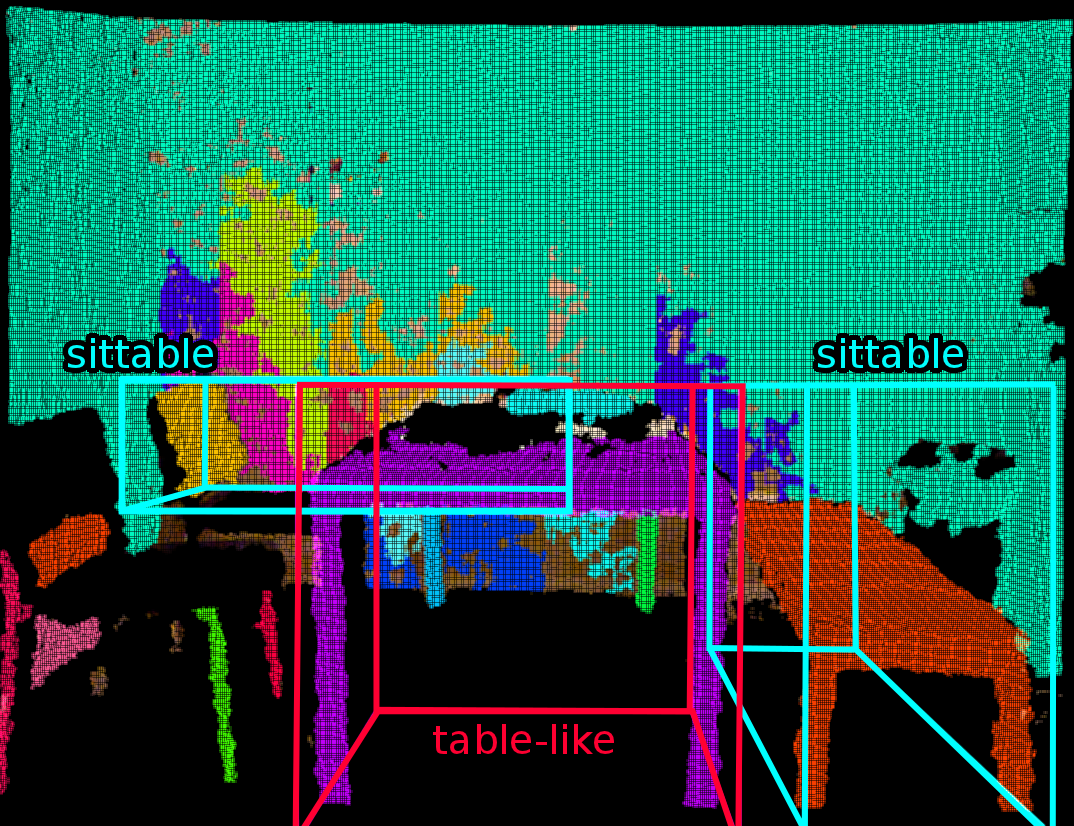

Unlike most affordance-learning systems, we hypothesized that some types of

functionality could be predicted using simulation instead of interaction.

We created a simulation using a physics-based model to explore what

types of features can be extracted from a 3D point cloud of an object using

simulated interactions. In particular, we focused on raining spheres of

varying size onto an object and considering the resulting distribution of

where they settle. We were able to distinguish such affordances as "sittable",

"cup-like", "table-like", etc.

Look at my

papers for

more information.
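
To give a feel for the sphere-raining idea, here is a deliberately simplified geometric sketch. It is not the physics-based simulation described above: each sphere falls straight down and stops at first contact, with no rolling or settling. The function names and the choice of "sphere bottom height" as a feature are assumptions made for this example.

```python
import math
import random


def first_contact_height(points, x, y, radius):
    """Height of a sphere's center when it first touches the cloud while
    falling straight down at (x, y); None if it misses every point."""
    best = None
    for px, py, pz in points:
        d2 = (px - x) ** 2 + (py - y) ** 2
        if d2 <= radius ** 2:
            # The sphere rests on this point when its center is this high.
            h = pz + math.sqrt(radius ** 2 - d2)
            if best is None or h > best:
                best = h
    return best


def rain_heights(points, radius, n_drops=200, seed=0):
    """Drop spheres at random (x, y) over the cloud's bounding box and
    return the height of each sphere's lowest point at first contact."""
    rng = random.Random(seed)
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    heights = []
    for _ in range(n_drops):
        x = rng.uniform(min(xs), max(xs))
        y = rng.uniform(min(ys), max(ys))
        h = first_contact_height(points, x, y, radius)
        if h is not None:
            heights.append(h - radius)
    return heights


# A flat 1 m x 1 m plate at z = 1.0, sampled every 10 cm (toy "table top").
plate = [(i / 10, j / 10, 1.0) for i in range(11) for j in range(11)]
small = rain_heights(plate, radius=0.05)
large = rain_heights(plate, radius=0.30)
print(len(small), len(large))
```

Comparing the resulting height distributions across several radii is one way such drops could be turned into features; small spheres can slip between or into structures that large spheres bridge over.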

BOLT - Broad Operational Language Translation

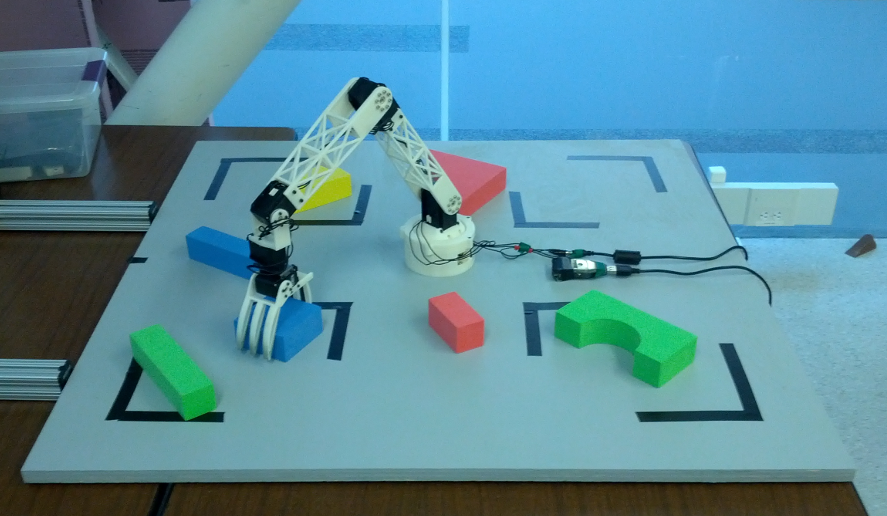

The goal of the Broad Operational Language Translation (BOLT) project

was to ground language in sensory data from the real world using an

interactive robotic system. Our system uses a robotic arm, a Kinect for

RGBD data, and the SOAR cognitive architecture to learn about its

environment through interaction with a mentor. The robot learns relevant

object properties by seeing, touching, and moving the object. Interaction

with a human mentor helps the robot connect human language to the

properties it has discovered. The work focused on the mechanics of

getting a physical system working, communication and information sharing

between a cognitive architecture and a Bayesian reasoning system, and

learning from minimal examples.

Soft-Keyboard Design

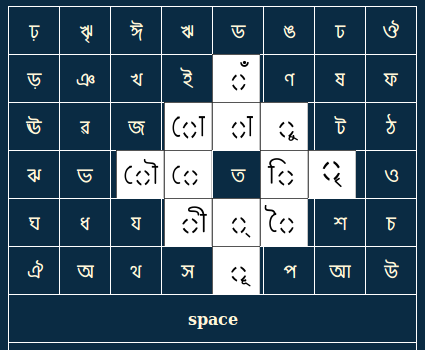

Soft-keyboards, those used on phones, tablets, or other touch-screens,

have the benefit of being easily modifiable for different languages so

that people can type optimally. This research, done at UCCS, explored

how optimal keyboards for languages with several times the number of

characters as English (including diacritics) can be designed. The work

focused on using genetic algorithms for Brahmic scripts, although other

optimization techniques and languages were also tested. This work

included both theoretical and experimental results obtained with users

around the world. Look at my

papers for

more information.
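
For readers unfamiliar with genetic algorithms, the toy sketch below evolves a key layout on a small grid. It is only an illustration under stated assumptions, not the method from the papers: the cost function (bigram frequency times Manhattan distance between keys), the order crossover, the swap mutation, and all parameter values are choices made for this example, and the six Latin letters stand in for a much larger character set.

```python
import random


def layout_cost(layout, bigrams, cols):
    """Sum of bigram frequency times key-to-key Manhattan distance."""
    pos = {ch: divmod(i, cols) for i, ch in enumerate(layout)}
    return sum(
        freq * (abs(pos[a][0] - pos[b][0]) + abs(pos[a][1] - pos[b][1]))
        for (a, b), freq in bigrams.items()
    )


def order_crossover(p1, p2, rng):
    """Keep a slice of p1 in place and fill the rest in p2's order,
    so the child is still a valid permutation of the characters."""
    i, j = sorted(rng.sample(range(len(p1)), 2))
    middle = p1[i:j]
    rest = [ch for ch in p2 if ch not in middle]
    return rest[:i] + middle + rest[i:]


def evolve(chars, bigrams, cols, pop_size=40, generations=200, seed=0):
    rng = random.Random(seed)
    chars = list(chars)
    pop = [rng.sample(chars, len(chars)) for _ in range(pop_size)]

    def cost(layout):
        return layout_cost(layout, bigrams, cols)

    for _ in range(generations):
        pop.sort(key=cost)
        survivors = pop[: pop_size // 2]  # the better half survives unchanged
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.sample(survivors, 2)
            child = order_crossover(a, b, rng)
            if rng.random() < 0.3:  # occasional swap mutation
                i, j = rng.sample(range(len(child)), 2)
                child[i], child[j] = child[j], child[i]
            children.append(child)
        pop = survivors + children
    return min(pop, key=cost)


# Made-up bigram counts, purely for demonstration.
toy_bigrams = {("a", "b"): 10, ("c", "d"): 5}
best = evolve("abcdef", toy_bigrams, cols=3)
print(best, layout_cost(best, toy_bigrams, cols=3))
```

Because layouts are permutations, the crossover has to preserve "each character appears exactly once", which is why a plain one-point crossover is not used here.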

Neural Net Backgammon

My senior year project at Colorado College was a replication of several

papers on TD-Gammon by Gerald Tesauro. TD-Gammon uses Temporal-Difference

learning (a type of reinforcement learning) on a neural network to teach a

computer to play backgammon by repeatedly playing itself and receiving

positive or negative feedback at the end of the game based on whether it

won. It was quite the learning experience for me, as I wrote everything

from scratch. Ultimately, my version of TD-Gammon was able to regularly

beat me. But then I don't claim to be particularly savvy at backgammon.
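
To show the flavor of the learning rule, here is a small TD(λ) sketch on a toy problem. It is not my backgammon code: the "game" is a five-state random walk where stepping off the right end counts as a win, and a single sigmoid unit stands in for the multilayer network. The state count, learning rate `alpha`, and trace decay `lam` are assumptions chosen for this example.

```python
import math
import random

N_STATES = 5  # non-terminal states 0..4; leaving on the right is a "win"


def features(s):
    """One-hot encoding of the state (a stand-in for a board encoding)."""
    x = [0.0] * N_STATES
    x[s] = 1.0
    return x


def value(w, s):
    """Predicted probability of winning from state s (single sigmoid unit)."""
    z = sum(wi * xi for wi, xi in zip(w, features(s)))
    return 1.0 / (1.0 + math.exp(-z))


def train(episodes=5000, alpha=0.1, lam=0.7, seed=0):
    rng = random.Random(seed)
    w = [0.0] * N_STATES
    for _ in range(episodes):
        s = N_STATES // 2
        trace = [0.0] * N_STATES  # eligibility trace, reset each game
        while True:
            v = value(w, s)
            # Decay the trace and add the gradient of the sigmoid output.
            trace = [lam * e + v * (1.0 - v) * xi
                     for e, xi in zip(trace, features(s))]
            s_next = s + rng.choice((-1, 1))
            if s_next < 0:
                target, done = 0.0, True   # lost: feedback only at the end
            elif s_next >= N_STATES:
                target, done = 1.0, True   # won
            else:
                target, done = value(w, s_next), False
            # Move earlier predictions toward the next prediction (or outcome).
            delta = target - v
            w = [wi + alpha * delta * e for wi, e in zip(w, trace)]
            if done:
                break
            s = s_next
    return w


w = train()
print([round(value(w, s), 2) for s in range(N_STATES)])
```

The key point is that no move is ever labeled good or bad directly; each prediction is nudged toward the following one, and only the final step is anchored to the actual result of the game.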
