What The...

What's Up? I'm a Research Professor in the Survey Research Center at the Institute for Social Research on the University of Michigan-Ann Arbor campus. I also have a joint appointment as a Research Professor in the U of M Department of Biostatistics. I am an Associate Director of the Health and Retirement Study, and I also serve on the Technical Advisory Committee of the Bureau of Labor Statistics. I have a PhD from the Michigan Program in Survey and Data Science, and both a master's degree in Applied Statistics and a bachelor's degree in Statistics from the U of M Department of Statistics.

CV

Books

Peer-Reviewed Journal Articles

Contributions to Edited Volumes

NIH Bibliography

GitHub

Research Statement

Teaching and Mentoring Statement

Project Management Statement

Service Statement

March Madness (Updated!)

Bowl Madness

Music

Current Courses

SURVMETH 687: Applications of Statistical Modeling, Fall 2023

Community Service

The University of Michigan Circle K

The Detroit Partnership

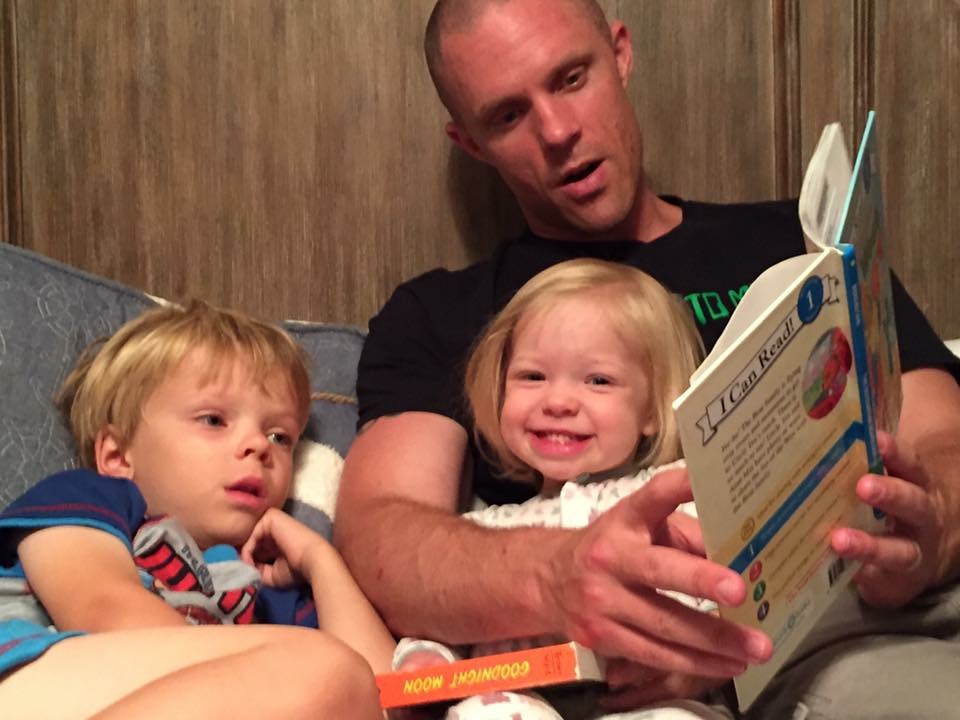

K-Grams =)

Random Stuff

Click here to see a picture of me and my wife Laura! =)

billiards.com

Bananas: IM Campus Champs!

Goin' To Work!

Good drops.

This page is constantly under construction, so visit again soon!

Last modified 11/1/2023 by Brady T. West